Detecting and responding to a threat in the earliest stages of the Cyber Attack Lifecycle is the key factor in preventing a breach from becoming a detrimental incident.

LogRhythm User and Entity Behavior Analytics (UEBA) detects and neutralizes both known and unknown user-based threats in real time. According to Gartner, “User & Entity Behavioral Analytics looks at patterns of human behavior, and then apply algorithms and statistical analysis to detect meaningful anomalies from those patterns—anomalies that indicate potential threats.”

UEBA is embedded into the LogRhythm NextGen SIEM Platform to give you extensive visibility into insider threats, compromised accounts, and privilege abuse that might otherwise go unnoticed. UEBA helps organizations reduce their mean time to detect (MTTD) a cyber threat, preventing that threat from turning into a damaging breach.


Figure 1: Cyber Attack Lifecycle

UEBA and AI Engine

UEBA functionality is built into LogRhythm’s AI Engine, improving detection and response times for unknown threats. The AI Engine is powered by more than a decade of development in the form of Machine Data Intelligence (MDI). MDI spanning several hundred devices drives the AI Engine rule logic. These rules are provided and continually updated by LogRhythm Labs.

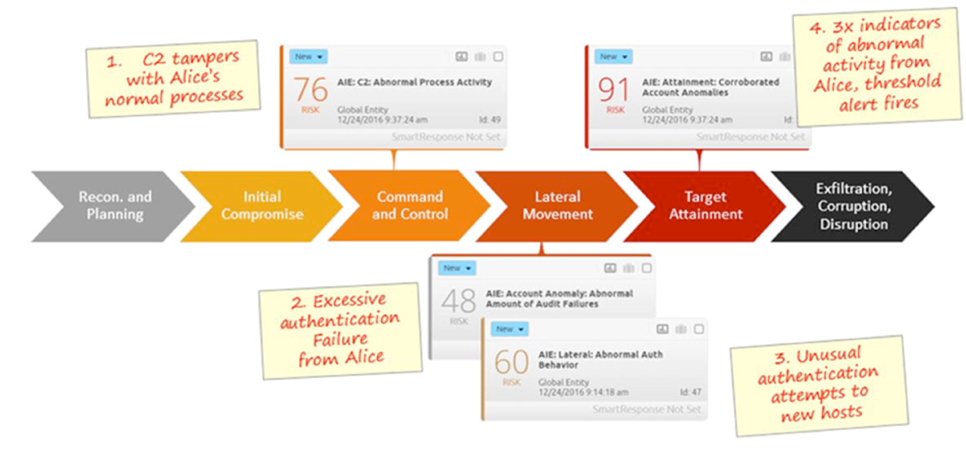

Let’s look at an example of the AI Engine taking action as a threat progresses to Target Attainment.

Attainment: Corroborated Account Anomalies AI Engine Rule Chain

The Attainment: Corroborated Account Anomalies AI Engine Rule Chain uses data models and statistical trends to detect threat activity as an attack advances toward Attainment.

Figure 2: The Attainment: Corroborated Account Anomalies AI Engine Rule Chain Working Along the Cyber Attack Lifecycle

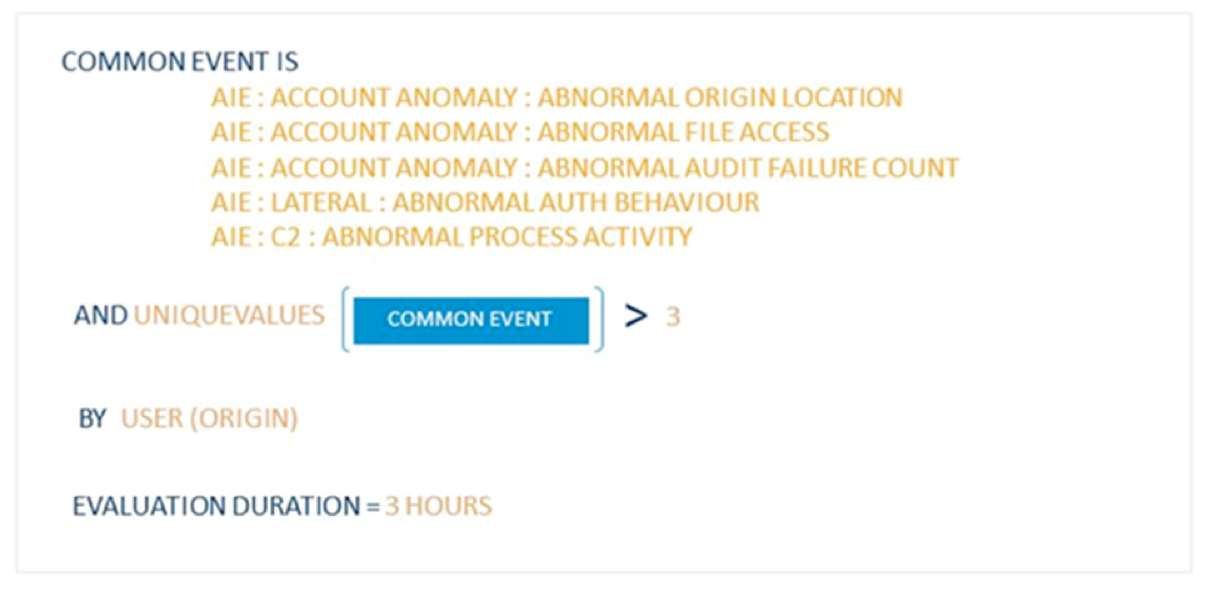

The high-priority Attainment: Corroborated Account Anomalies AI Engine alarm has a risk-based priority (RBP) of 91, making it a critical alarm. It is also a composite alarm: it fires as a result of the activity of several other AI Engine rules. In AI Engine terms, this is a loopback alarm.

Figure 3: The Attainment: Corroborated Account Anomalies AI Engine Alert as a Loopback Alarm

The Attainment: Corroborated Account Anomalies AI Engine alarm also contains a unique values rule block. In this rule logic, a unique values equation determines whether three or more distinct AI Engine-generated common events for the same origin user have occurred within a three-hour period.
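The unique values check can be sketched as a sliding window over each user’s common events. This is a minimal illustration, not LogRhythm’s implementation: the `CommonEvent` record, `corroborated_users`, and the in-memory event list are hypothetical, while the three-hour window and the threshold of three distinct events come from the rule description.

```python
from datetime import datetime, timedelta
from typing import Iterable, NamedTuple


class CommonEvent(NamedTuple):
    # Hypothetical record for one AI Engine-generated common event.
    timestamp: datetime
    origin_user: str
    name: str  # e.g. "C2: Abnormal Process Activity"


WINDOW = timedelta(hours=3)  # evaluation window from the rule description
MIN_UNIQUE = 3               # "three or more" distinct common events


def corroborated_users(events: Iterable[CommonEvent]) -> set[str]:
    """Return users with at least MIN_UNIQUE distinct event names inside any WINDOW."""
    by_user: dict[str, list[CommonEvent]] = {}
    for ev in events:
        by_user.setdefault(ev.origin_user, []).append(ev)

    flagged: set[str] = set()
    for user, evs in by_user.items():
        evs.sort(key=lambda e: e.timestamp)
        start = 0
        for end in range(len(evs)):
            # Drop events older than WINDOW relative to the newest event in the window.
            while evs[end].timestamp - evs[start].timestamp > WINDOW:
                start += 1
            # Count distinct rule names, not raw events, as a unique values check would.
            if len({e.name for e in evs[start:end + 1]}) >= MIN_UNIQUE:
                flagged.add(user)
                break
    return flagged


t0 = datetime(2024, 1, 1, 9, 0)
sample = [
    CommonEvent(t0, "alice", "C2: Abnormal Process Activity"),
    CommonEvent(t0 + timedelta(minutes=40), "alice", "Account Anomaly: Abnormal Amount of Audit Failures"),
    CommonEvent(t0 + timedelta(hours=2), "alice", "Lateral: Abnormal Authentication Behavior"),
    CommonEvent(t0, "bob", "C2: Abnormal Process Activity"),
]
print(corroborated_users(sample))  # {'alice'}
```

Counting distinct names rather than raw events means that one rule firing repeatedly for the same user cannot satisfy the corroboration requirement on its own.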

Figure 4: The Attainment: Corroborated Account Anomalies AI Engine Alert Rule Logic with a C2: Abnormal Process Activity Event Observed

When three or more distinct AI Engine common events occur for the same user within that window, the Attainment: Corroborated Account Anomalies alarm fires. As seen in Figure 4, the first matching event observed was C2: Abnormal Process Activity.

C2: Abnormal Process Activity

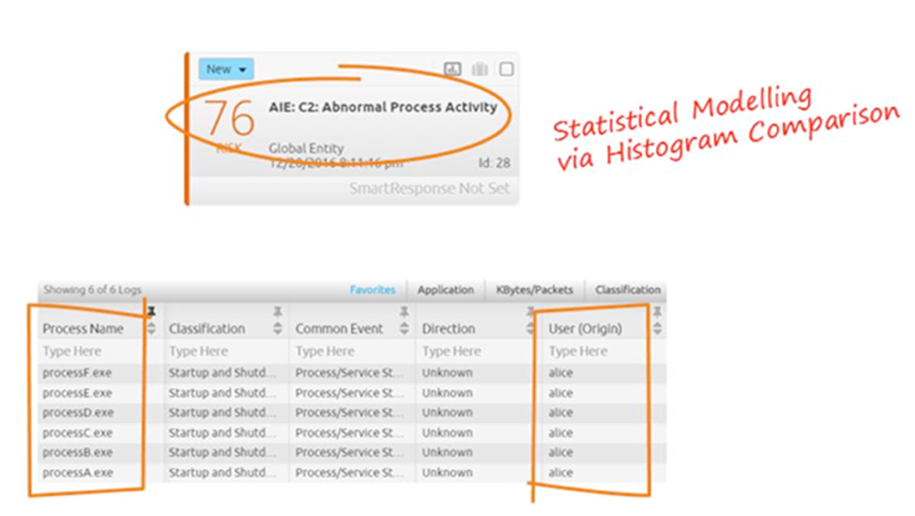

Figure 5: User Alice Has Some Anomalous Process Activity

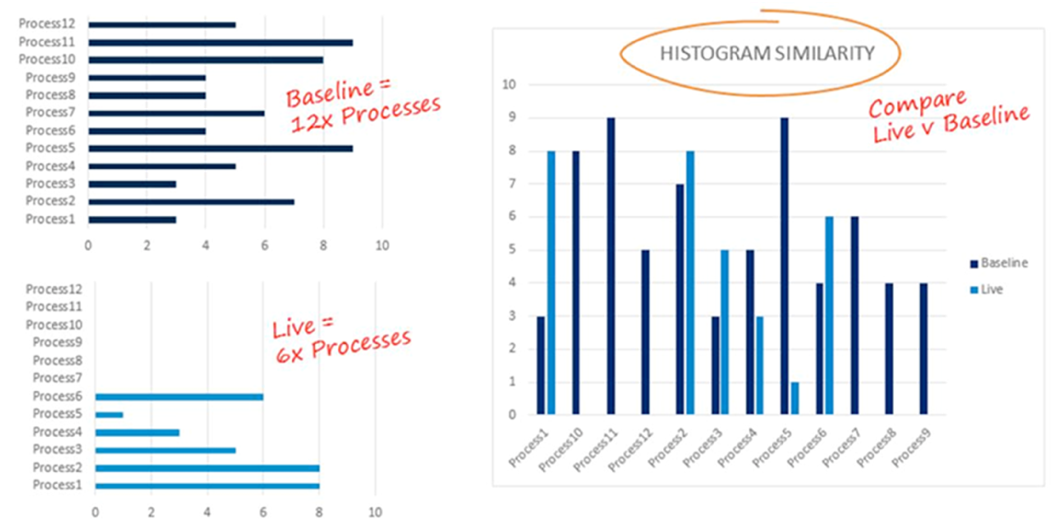

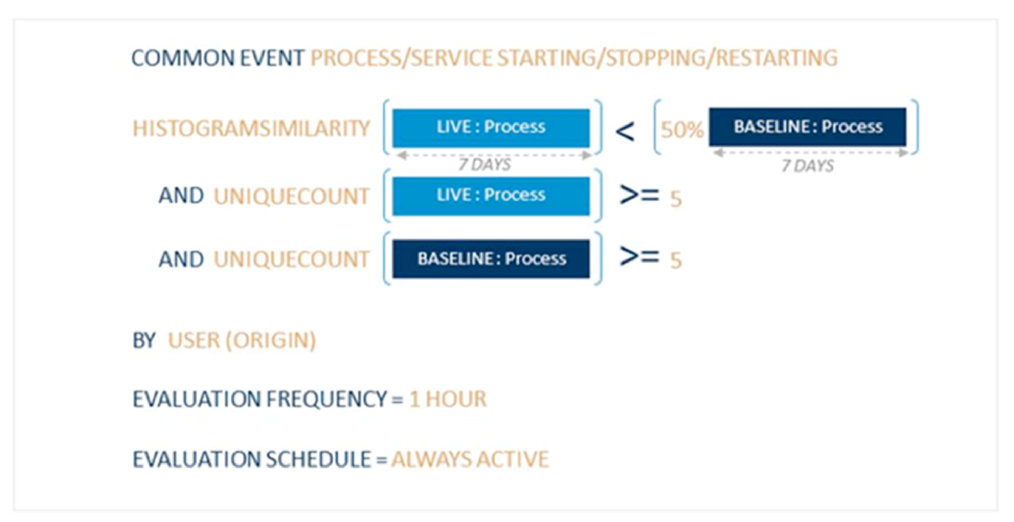

The C2: Abnormal Process Activity rule employs a statistical model driven by a histogram comparison. More on the mechanics of that shortly.

The alarm with a risk-based priority of 76 (seen above in Figure 5) shows that the user Alice has some anomalous process activity. The histogram similarity function within this AI Engine rule block measures how alike two sets of non-numeric field values are. Here, it compares a seven-day baseline of the user’s process activity against seven days of the user’s live process activity. If similarity drops by 50% for a given user account, the alarm logic condition is met and the alarm fires.
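A minimal sketch of a histogram comparison, assuming cosine similarity over process-name frequency counts and reading the 50% reduction as a similarity score below 0.5. The article does not state which similarity metric AI Engine uses, so the metric is a stand-in, and `histogram_similarity`, `abnormal_process_activity`, and `SIMILARITY_THRESHOLD` are hypothetical names.

```python
from collections import Counter
from math import sqrt
from typing import Sequence

# Assumption: "50% reduction in similarity" read as a similarity score below 0.5.
SIMILARITY_THRESHOLD = 0.5


def histogram_similarity(baseline: Counter, live: Counter) -> float:
    """Cosine similarity between two histograms of non-numeric values (0.0 to 1.0)."""
    keys = baseline.keys() | live.keys()
    dot = sum(baseline[k] * live[k] for k in keys)  # Counter returns 0 for missing keys
    norm_b = sqrt(sum(v * v for v in baseline.values()))
    norm_l = sqrt(sum(v * v for v in live.values()))
    if norm_b == 0 or norm_l == 0:
        return 0.0  # an empty histogram has nothing in common with anything
    return dot / (norm_b * norm_l)


def abnormal_process_activity(baseline_procs: Sequence[str], live_procs: Sequence[str]) -> bool:
    """Hypothetical rule check: fire when the live histogram diverges from the baseline."""
    score = histogram_similarity(Counter(baseline_procs), Counter(live_procs))
    return score < SIMILARITY_THRESHOLD
```

For example, a baseline dominated by `outlook.exe`, `chrome.exe`, and `excel.exe` compared against a live week dominated by `powershell.exe` and `psexec.exe` scores close to zero and fires, while an unchanged process mix scores 1.0 and does not.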

To visualize what’s happening behind the scenes, we can plot two graphs representing the baseline and live process activity. In this example, we’ll look at the activity of a specific user, Alice, and compare how similar the two graphs are.

Figure 6: Alice’s Live vs. Baseline Activity Comparison

The C2: Abnormal Process Activity AI Engine rule logic can be seen below in Figure 7. It includes the dimensions on which the model is built, such as the common event and user dimensions.

To ensure there is enough data to evaluate, a unique count function verifies that both the live and baseline models contain process data. This prevents the rule from alerting simply because metadata is missing from either model.
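The unique count guard can be sketched as a precondition that runs before any similarity comparison. This is an illustration under assumptions: `has_enough_data`, `evaluate`, and `MIN_DISTINCT_PROCESSES` are hypothetical, and the threshold of one distinct process per model is a placeholder, since the article only says that both models must contain process data.

```python
from typing import Callable, Sequence

# Hypothetical threshold: the rule only requires process data in both models.
MIN_DISTINCT_PROCESSES = 1


def has_enough_data(baseline_procs: Sequence[str], live_procs: Sequence[str],
                    min_distinct: int = MIN_DISTINCT_PROCESSES) -> bool:
    """Unique-count guard: both models must contain at least min_distinct process names."""
    return (len(set(baseline_procs)) >= min_distinct
            and len(set(live_procs)) >= min_distinct)


def evaluate(baseline_procs: Sequence[str], live_procs: Sequence[str],
             is_anomalous: Callable[[Sequence[str], Sequence[str]], bool]) -> bool:
    """Run the anomaly check only when the guard passes; otherwise never alert."""
    if not has_enough_data(baseline_procs, live_procs):
        return False  # missing metadata in either model is not evidence of a threat
    return is_anomalous(baseline_procs, live_procs)
```

The guard matters because many similarity measures score an empty histogram as completely dissimilar, so without it a user with no logged processes in one period would look maximally anomalous.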

Figure 7: The C2: Abnormal Process Activity AI Engine Rule Logic

Finally, AI Engine builds on LogRhythm’s MDI, gathering data from a myriad of log source devices that report process activity. These sources include LogRhythm’s own endpoint agent (the System Monitor), native operating system audit logs, and third-party endpoint agents.

Account Anomaly: Abnormal Amount of Audit Failures

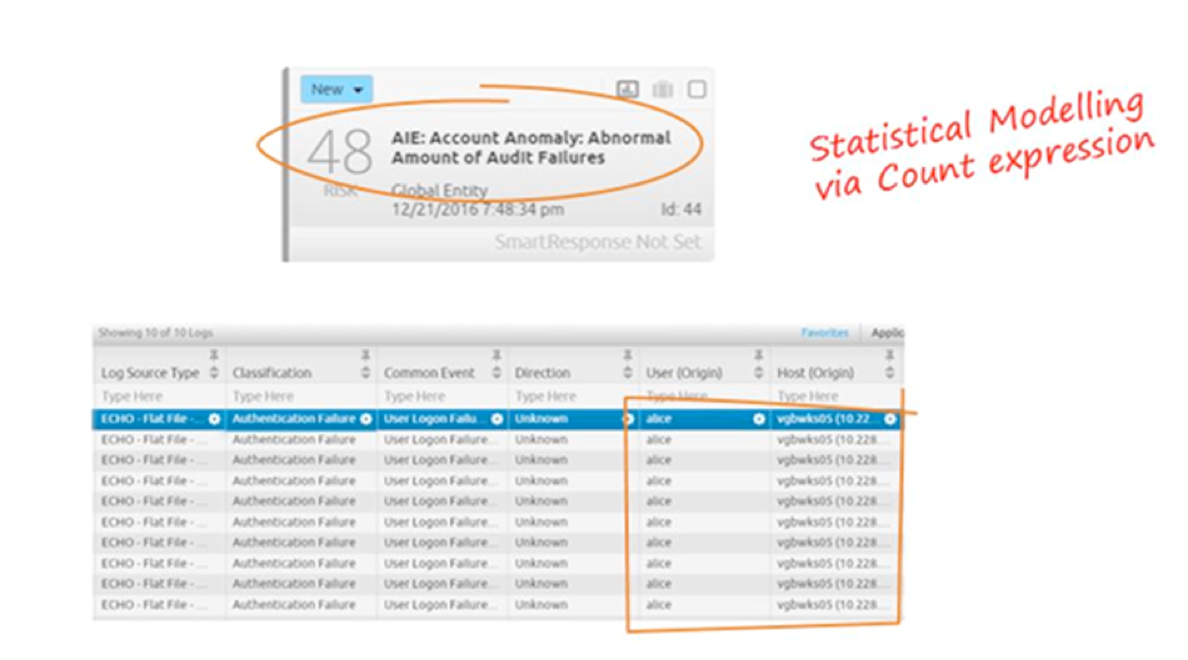

The next rule in the chain toward Attainment is Account Anomaly: Abnormal Amount of Audit Failures. This AI Engine alarm applies statistical modeling via a count expression. The alarm card and drilldown data show that Alice has had an abnormal amount of audit failures.

Figure 8: Account Anomaly: Abnormal Amount of Audit Failures Alarm Fires as Alice is Seen to Have an Abnormal Amount of Audit Failures

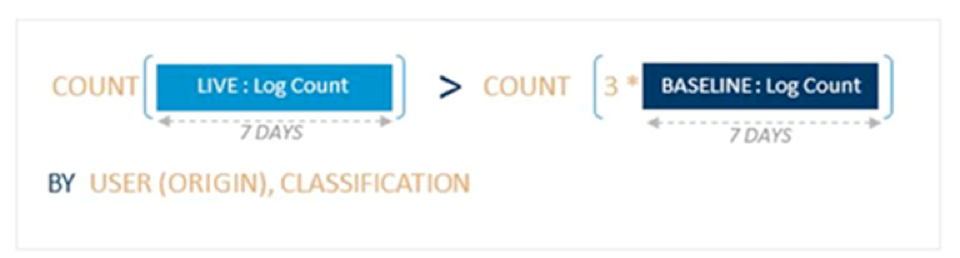

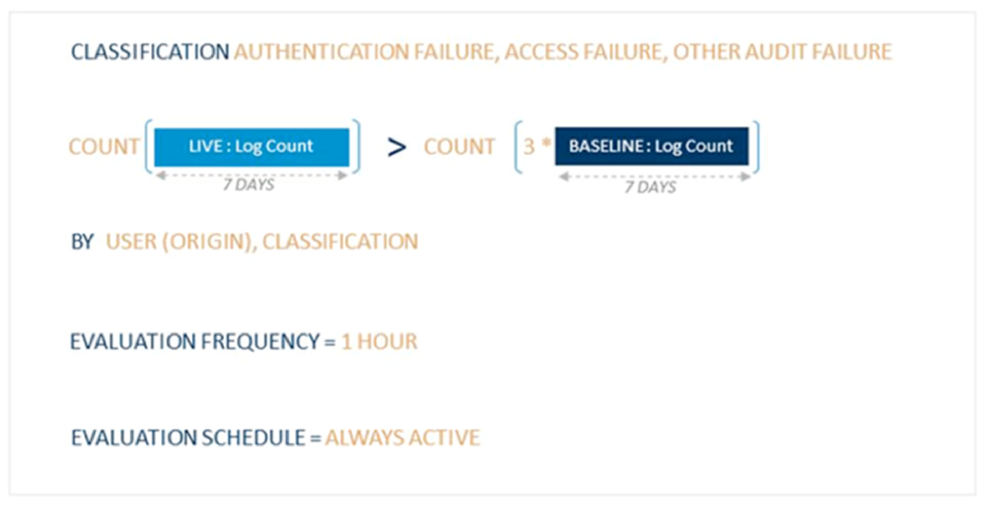

The count expression compares the log counts in the live and baseline periods, with optional multipliers and offsets. The Account Anomaly: Abnormal Amount of Audit Failures AI Engine rule compares a seven-day baseline against a seven-day live period, grouped by user and classification. The rule logic is met when the live count reaches three times the baseline count.
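A count expression of this kind can be sketched as a per-key comparison of live and baseline log counts. The multiplier of 3 comes from the rule description; the form `live >= multiplier * baseline + offset`, the default offset of 0, and the names `abnormal_audit_failures`, `MULTIPLIER`, and `OFFSET` are assumptions for illustration. "Authentication Failure" is used only as an example classification value.

```python
from collections import Counter
from typing import Iterable, Tuple

Key = Tuple[str, str]  # (user, classification)

MULTIPLIER = 3.0  # live count must reach three times the baseline
OFFSET = 0.0      # hypothetical additive offset; the article says offsets are optional


def abnormal_audit_failures(baseline_events: Iterable[Key], live_events: Iterable[Key],
                            multiplier: float = MULTIPLIER,
                            offset: float = OFFSET) -> set[Key]:
    """Return (user, classification) keys whose live count meets the count expression."""
    baseline = Counter(baseline_events)  # one entry per audit-failure log in the baseline period
    live = Counter(live_events)          # one entry per audit-failure log in the live period
    return {key for key, live_count in live.items()
            if live_count >= multiplier * baseline[key] + offset}
```

With a baseline of 4 failures and a live count of 12, the user meets the condition (12 is three times 4); a user moving from 10 to 11 failures does not. With an offset of 0, any failure by a user with no baseline also qualifies, which is one reason a positive offset can be useful.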

Figure 9: The Account Anomaly: Abnormal Amount of Audit Failures AI Engine Rule

Figure 10: The Data Model Occurring in the AI Engine for the Account Anomaly: Abnormal Amount of Audit Failures

In this data model, each combination of user and classification has a live (light blue) and a baseline (dark blue) count. In this visualization, the live count of audit failures for the user Chuck is three times his baseline.

Figure 11: Full Account Anomaly: Abnormal Amount of Audit Failures AI Engine Rule Logic

Lateral: Abnormal Authentication Behavior

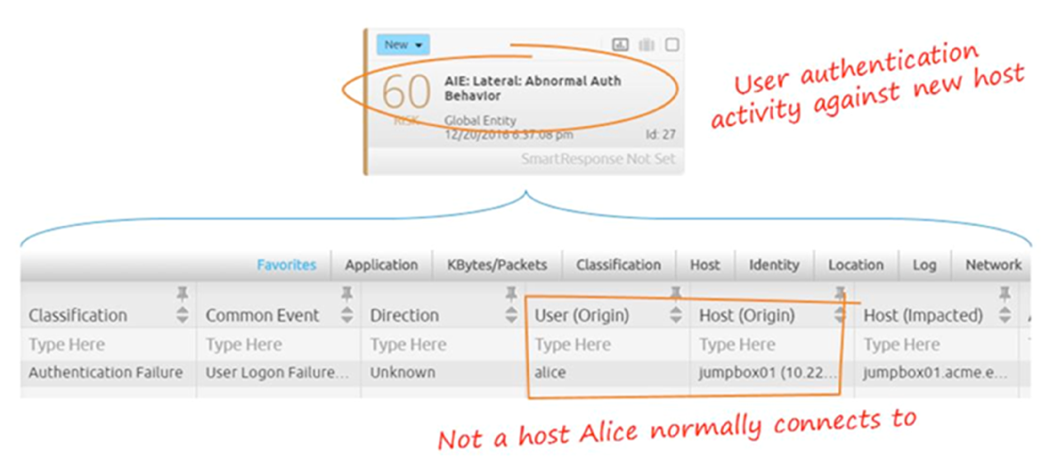

The third alarm to fire in the chain toward Attainment is Lateral: Abnormal Authentication Behavior. Again, the alarm card and drilldown show that Alice has attempted to authenticate against a host she normally does not use.

Figure 12: Alice Has Attempted to Authenticate Against a Host She Normally Does Not

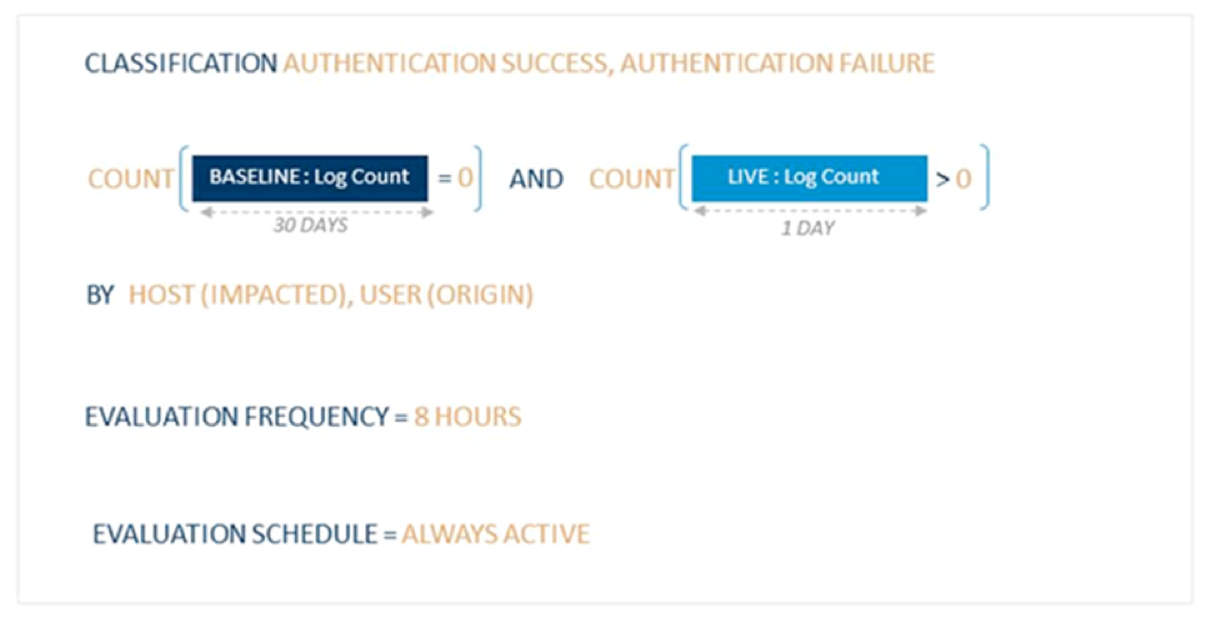

The Lateral: Abnormal Authentication Behavior AI Engine rule logic uses a baseline and a live period, like the previous rules. In this case, however, it builds its data model over a longer period of 30 days. A user has a baseline count of 0 for any host they have not connected to in the last 30 days, so an alarm is generated when that user connects to a new host.
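The zero-baseline test can be sketched by counting (user, host) pairs over the 30 days before the live period and flagging live authentications whose pair count is 0. The 30-day window comes from the rule description; the `AuthEvent` record, `new_host_authentications`, the `live_start` boundary, and the host names in the usage note are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Iterable, List, NamedTuple


class AuthEvent(NamedTuple):
    # Hypothetical record for one authentication log.
    timestamp: datetime
    user: str
    host: str


BASELINE_WINDOW = timedelta(days=30)  # baseline length from the rule description


def new_host_authentications(events: Iterable[AuthEvent], live_start: datetime) -> List[AuthEvent]:
    """Return live-period authentications to hosts with a baseline count of 0 for that user."""
    events = list(events)
    baseline_start = live_start - BASELINE_WINDOW
    # Baseline model: how often each user authenticated to each host in the prior 30 days.
    baseline = Counter((e.user, e.host) for e in events
                       if baseline_start <= e.timestamp < live_start)
    # Live period: anything at or after live_start whose (user, host) pair was never seen.
    return [e for e in events
            if e.timestamp >= live_start and baseline[(e.user, e.host)] == 0]
```

For example, if Alice authenticated to `FILESRV01` ten days before the live period and to `HR-DB` eight weeks before it, then live authentications to `DC02` and `HR-DB` are flagged, while a live authentication to `FILESRV01` is not, because her `HR-DB` access has aged out of the 30-day baseline.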

Figure 13: The Lateral: Abnormal Authentication Behavior AI Engine Rule Logic

On its own, a user authenticating to a host they have not accessed before, or not accessed recently, is not necessarily a security threat, but it is anomalous.

When this alarm is corroborated by the other AI Engine alarms fired for the same user elsewhere in the chain, the user meets the requirement of three events, triggering the original top-level Attainment: Corroborated Account Anomalies AI Engine rule.
